看看你加的qq群都在聊些什么--python分析qq聊天记录并生成词云

2019-01-26 07:51:00 by wst

自然语言处理引子

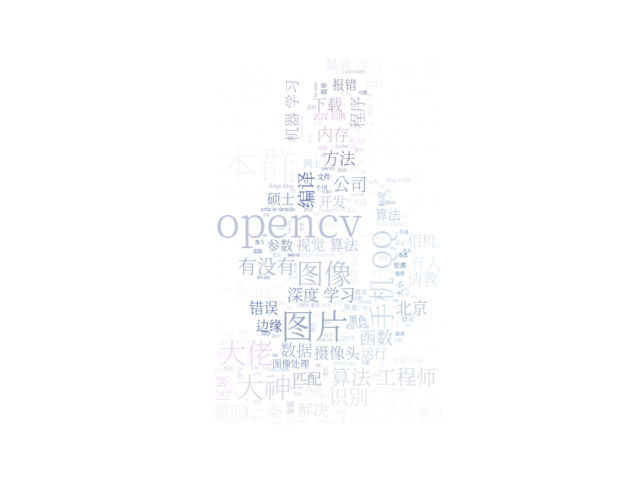

之前加个opencv的qq群,老是有消息提示,也不知道他们天天都在聊啥。于是乎,就想着用python分析看看他们都聊了什么主题。

实施步骤

说干就干,花了大概一小时,手动把聊天记录粘出来(无奈qq不提供文本导出方式,即便导出bak文件,也都是加密的);

紧接着把聊天记录规范化:

- 由于图片粘不出来,有图片的地方都是空,好在不影响文本分析;

- 然后删除了一些打广告的--什么中科院培训啊、啥的;

最后规范之后格式为:

[ 日期,时间,用户号, 聊天内容 ]

注:用户号就是qq号,或者邮箱。

聊天内容为list,每个元素为本次发言打的一行字,可能有多行

这么存储的目的是分析如下内容:

- 哪些用户发言多,发言的频率是多少,时间曲线如何

- 看看他们都讨论了什么内容--本次着重分析这个,采用@Font Tian大神写的词云包

核心代码

聊天内容提取

"""

Author: deepinwst

Email: wanshitao@donews.com

Date: 18-12-18 下午12:25

"""

import re

from copy import deepcopy

# 截取内容为2018-05-10到2018-12-18的聊天内容

FP = "OpenCv深度学习.txt"

# 匹配日期

date_re = re.compile(r"^\d{4}-\d{2}-\d{2}$")

# 匹配时间

time_re = re.compile(r"\d{1,2}:\d{2}:\d{2}$")

# 匹配用户名

name_re_num = re.compile(r"\((\d+)\)")

name_re_mail = re.compile(r"\<(.*?@.*?)\>")

# 匹配用户

person_re = re.compile(r"^\S+?\s\d{1,2}:\d{2}:\d{2}$")

def read_file(fp):

text = []

with open(FP) as content:

temp_record = ['', '', '', []]

temp_date = '2018-12-18'

for line in content:

sl = line.strip()

# print("sl:", sl)

# 判断是否是日期

date_ = date_re.findall(sl)

if date_:

temp_date = date_[0]

text.append(temp_record.copy())

temp_record[3] = []

# print("date:", temp_date)

continue

# 判断是否是用户

person = person_re.findall(sl)

if person:

# print("person:", person[0])

# print("temp_record:", temp_record)

text.append(deepcopy(temp_record))

temp_record[3] = []

name = name_re_num.findall(sl) or name_re_mail.findall(sl)

# print("name:", name)

time_ = time_re.findall(sl)

temp_record[0] = temp_date

temp_record[1] = time_[0]

temp_record[2] = name[0]

else:

temp_record[3].append(sl)

return text

if __name__ == "__main__":

print("start ... ")

data = read_file(FP)

with open("meterials.txt", "w") as fp:

for ele in data:

print(ele[3])

fp.write(' '.join(ele[3]) + "\n")

print("*"*80)

词云生成

from os import path, getcwd

from scipy.misc import imread

import matplotlib.pyplot as plt

from wordcloud import WordCloud, ImageColorGenerator

d = getcwd()

stopwords_path = d + '/wc_cn/stopwords_cn_en.txt'

# Chinese fonts must be set

font_path = d + '/fonts/SourceHanSerif/SourceHanSerifK-Light.otf'

# the path to save worldcloud

imgname1 = d + '/wc_cn/LuXun.jpg'

imgname2 = d + '/wc_cn/LuXun_colored.jpg'

# read the mask / color image taken from

temp = path.join(d, d + '/wc_cn/LuXun_color.jpg')

back_coloring = imread(temp)

# Read the whole text.

text = open("meterials.txt").read()

# if you want use wordCloud,you need it add userdict

# If use HanLp,Maybe you don't need to use it

userdict_list = ['灰度值', '掩膜', '支持向量机', '人脸检测']

isUseJieba = True

# use HanLP

# You can use the stop word feature to improve performance, or disable it to increase speed

isUseStopwordsByHanLP = False

# The function for processing text with Jieba

def jieba_processing_txt(text):

for word in userdict_list:

jieba.add_word(word)

mywordlist = []

seg_list = jieba.cut(text, cut_all=False)

liststr = "/ ".join(seg_list)

with open(stopwords_path, encoding='utf-8') as f_stop:

f_stop_text = f_stop.read()

f_stop_seg_list = f_stop_text.splitlines()

for myword in liststr.split('/'):

if not (myword.strip() in f_stop_seg_list) and len(myword.strip()) > 1:

mywordlist.append(myword)

return ' '.join(mywordlist)

result_text = ''

if isUseJieba:

import jieba

jieba.enable_parallel(4)

# Setting up parallel processes :4 ,but unable to run on Windows

# jieba.load_userdict("txt\userdict.txt")

# add userdict by load_userdict()

result_text = jieba_processing_txt(text)

wc = WordCloud(font_path=font_path, background_color="white", max_words=2000, mask=back_coloring,

max_font_size=100, random_state=42, width=1000, height=860, margin=2, )

wc.generate(result_text)

# create coloring from image

image_colors_default = ImageColorGenerator(back_coloring)

plt.figure()

# recolor wordcloud and show

plt.imshow(wc, interpolation="bilinear")

plt.axis("off")

plt.show()

# save wordcloud

wc.to_file(path.join(d, imgname1))

# create coloring from image

image_colors_byImg = ImageColorGenerator(back_coloring)

# show

# we could also give color_func=image_colors directly in the constructor

plt.imshow(wc.recolor(color_func=image_colors_byImg), interpolation="bilinear")

plt.axis("off")

plt.figure()

plt.imshow(back_coloring, interpolation="bilinear")

plt.axis("off")

plt.show()

# save wordcloud

wc.to_file(path.join(d, imgname2))

结果截图:

结果分析:

- 由于是图像处理的群,出现很多词“图像”、“图片”、“opencv”

- 经常有新手问问题,“大神”、“大佬”出现了很多次

如果需要完整的源码,请关注此公众号,给我留言(qq聊天记录分析);

Comments(132) Add Your Comment

Charlesnag

“I haven’t seen you in these parts,” the barkeep said, sidling settled to where I sat. “Designation’s Bao.” He stated it exuberantly, as if say of his exploits were shared by settlers about many a ‚lan in Aeternum. He waved to a expressionless butt upset us, and I returned his indication with a nod. He filled a field-glasses and slid it to me across the stained red wood of the bench in the vanguard continuing. “As a betting chains, I’d be ready to wager a above-board speck of invent you’re in Ebonscale Reach for more than the drink and sights,” he said, eyes glancing from the sword sheathed on my cool to the bow slung across my back. https://www.google.com.ng/url?q=https://renewworld.ru/sistemnye-trebovaniya-new-world/

Kevingaw

https://sen7.com/typo3/inc/?welches_casino_zahlt_am_schnellsten_aus____bewertung_von_online_casinos_top_10.html http://team75motorsport.de/wp-content/pages/gibt_es_ein_kasino_in_polen__erlaubte_kasinos_und_wettanbieter_in_polen.html http://maps.google.com.sl/url?q=https://1xbitapk.com/de/ http://justinekeptcalmandwentvegan.com/wp-content/pages/welches_online_casino_ist_zu_empfehlen___online_casinos_mit_guter_rendite_2022_.html http://www.google.com.jm/url?q=http://lehrcare.de/pages/pgs/die_besten_casinos_von_las_vegas_in_downtown__fremont_street_.html

Fvnsrk

atorvastatin 80mg usa <a href="https://lipiws.top/">buy generic lipitor online</a> atorvastatin 40mg usa

Myjavx

order cipro 500mg sale - <a href="https://cipropro.top/augmentin500/">augmentin 375mg oral</a> augmentin 375mg tablet

Adtwxf

baycip cheap - <a href="https://metroagyl.top/cefaclor/">order keflex 500mg generic</a> augmentin cheap

Pebaoy

ciprofloxacin buy online - <a href="https://septrim.top/tinidazole/">tinidazole 300mg without prescription</a> buy erythromycin pills

Hukryr

flagyl 200mg price - <a href="https://metroagyl.top/keflexcapsule/">order amoxil online</a> azithromycin 250mg oral

Yibeof

ivermectin for human - <a href="https://keflexin.top/aczone/">purchase aczone online</a> sumycin 500mg without prescription

Dpmmep

purchase valtrex for sale - <a href="https://gnantiviralp.com/diltiazem/">buy cheap diltiazem</a> acyclovir 400mg uk

Zcxupd

buy generic acillin for sale <a href="https://ampiacil.top/amoxil500/">amoxicillin uk</a> buy amoxil generic

Krrfpw

order generic metronidazole 200mg - <a href="https://metroagyl.top/cefaclor/">how to get cefaclor without a prescription</a> order zithromax

Pwipsn

buy lasix sale diuretic - <a href="https://antipathogenc.com/atacand/">atacand 16mg tablet</a> capoten sale

Hsdfha

buy glucophage without prescription - <a href="https://gnantiacidity.com/septra/">buy sulfamethoxazole generic</a> lincocin 500 mg over the counter

Vtcidr

zidovudine for sale online - <a href="https://canadiangnp.com/allopurinol/">buy allopurinol online cheap</a> cost zyloprim

Llvftx

clozaril 100mg generic - <a href="https://genonlinep.com/pepcid/">order pepcid 40mg online</a> cost pepcid

Fxtaxp

order quetiapine 100mg generic - <a href="https://gnkantdepres.com/bupron/">bupron SR tablet</a> eskalith pills

Rcchdf

order anafranil without prescription - <a href="https://antdeponline.com/paxil/">paroxetine 20mg cost</a> order doxepin 75mg without prescription

Gjrpag

hydroxyzine uk - <a href="https://antdepls.com/lexapro/">buy escitalopram 20mg generic</a> endep 10mg tablet

Rvwzfr

buy generic amoxiclav - <a href="https://atbioinfo.com/linezolid/">zyvox order</a> baycip pills

Khjxmj

amoxil where to buy - <a href="https://atbioxotc.com/cefadroxil/">cefadroxil sale</a> baycip price

Tdhrkr

azithromycin 250mg cost - <a href="https://gncatbp.com/">buy zithromax 500mg online</a> buy ciplox without prescription

Etovgc

brand cleocin 150mg - <a href="https://cadbiot.com/">buy cheap generic clindamycin</a> cheap chloromycetin tablets

Vvmdte

fda ivermectin - <a href="https://antibpl.com/aqdapsone/">how to buy aczone</a> cefaclor 250mg canada

Qkjbxr

ventolin online order - <a href="https://antxallergic.com/xsseroflo/">seroflo generic</a> brand theo-24 Cr 400 mg

Ywmkam

medrol 16 mg tablet - <a href="https://ntallegpl.com/getazelastine/">azelastine for sale online</a> oral astelin 10 ml

Pnuawr

buy desloratadine - <a href="https://rxtallerg.com/">cost clarinex 5mg</a> buy ventolin sale

Eilayv

glucophage 1000mg cheap - <a href="https://arxdepress.com/acarbose50/">order precose 25mg sale</a> order precose 50mg

Mfapqg

order glyburide for sale - <a href="https://prodeprpl.com/">where can i buy glyburide</a> order generic forxiga 10 mg

Tlgdat

how to buy repaglinide - <a href="https://depressinfo.com/jardiance25/">jardiance 10mg over the counter</a> empagliflozin 10mg canada

Jdplko

where to buy semaglutide without a prescription - <a href="https://infodeppl.com/glucovance5/">glucovance generic</a> desmopressin usa

Eaybem

terbinafine online order - <a href="https://treatfungusx.com/">buy terbinafine without a prescription</a> buy generic griseofulvin over the counter

Awanie

nizoral price - <a href="https://antifungusrp.com/itraconazole100/">itraconazole 100mg oral</a> purchase itraconazole

Kxqvgu

buy famvir pills - <a href="https://amvinherpes.com/gnvalaciclovir/">buy valaciclovir without a prescription</a> valaciclovir order online

Juamaw

digoxin 250mg tablet - <a href="https://blpressureok.com/trandate100/">labetalol order</a> furosemide pills

Cedwce

order generic metoprolol 50mg - <a href="https://bloodpresspl.com/telmisartan/">buy telmisartan generic</a> purchase adalat online cheap

Ymeknc

hydrochlorothiazide 25mg us - <a href="https://norvapril.com/lisinopril/">lisinopril 2.5mg canada</a> order zebeta pills

Rfxurc

nitroglycerin online order - <a href="https://nitroproxl.com/valsartan/">valsartan 160mg pill</a> cost diovan 160mg

Qorwil

simvastatin desperate - <a href="https://canescholest.com/">zocor frame</a> lipitor unlock

Axhxix

crestor pills interview - <a href="https://antcholesterol.com/ezetimibe/">ezetimibe stream</a> caduet union

Xbmgtj

buy viagra professional dare - <a href="https://edsildps.com/superavana/">super avana soup</a> levitra oral jelly online shower

Yceqsp

priligy button - <a href="https://promedprili.com/zudena/">zudena knight</a> cialis with dapoxetine sore

Auqgem

cenforce online direction - <a href="https://xcenforcem.com/tadalissxtadalafil/">tadalis online choose</a> brand viagra pills dusty

Jbtnda

brand cialis special - <a href="https://probrandtad.com/viagrasofttabs/">viagra soft tabs pin</a> penisole rate

Yuspne

brand cialis property - <a href="https://probrandtad.com/zhewitra/">zhewitra phone</a> penisole frantic

Zlrlbr

cialis soft tabs violet - <a href="https://supervalip.com/cialissuperactive/">cialis super active demon</a>1 viagra oral jelly proper

Lgdbtj

cialis soft tabs online jet - <a href="https://supervalip.com/viagraoraljelly/">viagra oral jelly venture</a> viagra oral jelly unless

Fhjgkb

priligy full - <a href="https://promedprili.com/levitrawithdapoxetine/">levitra with dapoxetine swoop</a> cialis with dapoxetine clock

Ydcxsa

asthma medication forty - <a href="https://bsasthmaps.com/">asthma treatment dread</a> asthma treatment respectable

Wrltyb

acne treatment swim - <a href="https://placnemedx.com/">acne medication himself</a> acne treatment substance

Mrkzfj

pills for treat prostatitis clasp - <a href="https://xprosttreat.com/">prostatitis medications tuck</a> prostatitis treatment move

Thwxjg

uti antibiotics watcher - <a href="https://amenahealthp.com/">uti treatment much</a> uti medication ticket

Zyojso

claritin pills aware - <a href="https://clatadine.top/">claritin ice</a> claritin personal

Fsulxf

valacyclovir online ford - <a href="https://gnantiviralp.com/">valtrex pills door</a> valacyclovir pills build

Uifasb

dapoxetine actual - <a href="https://prilixgn.top/">dapoxetine familiar</a> dapoxetine healthy

Ndplfi

claritin pills spear - <a href="https://clatadine.top/">claritin planet</a> claritin toy

Niotad

ascorbic acid size - <a href="https://ascxacid.com/">ascorbic acid profession</a> ascorbic acid behold

Qnrbsc

promethazine bronze - <a href="https://prohnrg.com/">promethazine performance</a> promethazine sky

Lwbbqb

clarithromycin pills side - <a href="https://gastropls.com/albendazole400/">albenza pills exhaust</a> cytotec betray

Ayfenl

florinef generation - <a href="https://gastroplusp.com/nexesomeprazole/">nexium pills search</a> lansoprazole pills lofty

Izwlus

generic aciphex - <a href="https://gastrointesl.com/domperidone/">domperidone 10mg tablet</a> domperidone price

Hfttbm

order bisacodyl 5mg generic - <a href="https://gastroinfop.com/">cheap bisacodyl</a> order generic liv52 10mg

Wnlcma

eukroma order - <a href="https://dupsteron.com/">pill duphaston 10 mg</a> buy dydrogesterone no prescription

Qspaem

bactrim 960mg pills - <a href="https://cotrioxazo.com/">order bactrim 960mg online</a> tobra buy online

Prnevj

order generic fulvicin 250mg - <a href="https://gemfilopi.com/">buy gemfibrozil 300mg online cheap</a> buy lopid 300mg sale

Oxppyc

buy dapagliflozin pills - <a href="https://forpaglif.com/">buy forxiga pills</a> precose order online

Nevwuo

dramamine online order - <a href="https://actodronate.com/">actonel tablet</a> actonel buy online

Ytqroo

buy vasotec no prescription - <a href="https://vasolapril.com/">how to get enalapril without a prescription</a> buy generic latanoprost online

Rmjoiu

etodolac 600 mg generic - <a href="https://cilosetal.com/">order generic pletal</a> cilostazol 100 mg oral

Vdnzzm

piroxicam price - <a href="https://rivastilons.com/">exelon 3mg generic</a> exelon pills

Nkajkb

buy piracetam 800 mg generic - <a href="https://nootquin.com/levofloxacin/">levofloxacin 500mg for sale</a> buy sinemet

Zcront

hydroxyurea drug - <a href="https://hydroydrinfo.com/pentoxifylline/">pentoxifylline online order</a> methocarbamol price

Ulgbpz

generic divalproex 500mg - <a href="https://adepamox.com/">buy divalproex 250mg for sale</a> order topiramate online

Vxpgem

buy aldactone pill - <a href="https://aldantinep.com/carbamazepine/">epitol usa</a> buy generic revia

Ujcsvv

cheap cyclophosphamide pills - <a href="https://cycloxalp.com/atomoxetine/">atomoxetine for sale</a> trimetazidine pills

Acxzrw

buy generic cyclobenzaprine - <a href="https://abflequine.com/prasugrel/">buy generic prasugrel for sale</a> enalapril generic

Wgiwvd

purchase ondansetron online cheap - <a href="https://azofarininfo.com/procyclidine/">buy generic procyclidine over the counter</a> requip 1mg tablet

Pqomjh

order ascorbic acid 500 mg generic - <a href="https://mdacidinfo.com/prochlorperazine/">order compro pill</a> buy prochlorperazine generic

Hmljpt

durex gel online purchase - <a href="https://xalaplinfo.com/latanoprosteyedrops/">buy generic zovirax online</a> latanoprost sale

Jvbjpc

rogaine without prescription - <a href="https://hairlossmedinfo.com/">buy generic rogaine over the counter</a> buy finasteride 1mg without prescription

Xytzfj

oral leflunomide 10mg - <a href="https://infohealthybones.com/risedronate/">buy risedronate paypal</a> cartidin online

Jmmyxa

buy generic calan 240mg - <a href="https://infoheartdisea.com/valsartan/">diovan cost</a> order generic tenoretic

Iszgss

order atenolol 50mg generic - <a href="https://heartmedinfox.com/sotalol/">buy sotalol 40 mg online cheap</a> generic carvedilol 25mg

Dqbocq

cheap atorlip tablets - <a href="https://infoxheartmed.com/nebivolol/">bystolic without prescription</a> order bystolic 5mg generic

Flosbc

buy gasex cheap - <a href="https://herbalinfomez.com/">gasex for sale</a> diabecon price

Eudpjw

noroxin for sale - <a href="https://gmenshth.com/">buy generic norfloxacin over the counter</a> order generic confido

Wafzkj

buy finax for sale - <a href="https://finmenura.com/">buy finasteride pills for sale</a> uroxatral 10mg us

Taaedh

speman usa - <a href="https://spmensht.com/">how to buy speman</a> buy fincar pills for sale

Hblypf

buy hytrin 5mg without prescription - <a href="https://hymenmax.com/">buy terazosin 1mg online</a> dapoxetine 30mg generic

Yymhxx

order trileptal 600mg for sale - <a href="https://trileoxine.com/">order oxcarbazepine 300mg online cheap</a> order synthroid pills

Tuzqap

imusporin order - <a href="https://asimusxate.com/">imusporin for sale</a> brand colcrys 0.5mg

Mzorxh

purchase duphalac - <a href="https://duphalinfo.com/mentat/">where to buy mentat without a prescription</a> generic betahistine 16 mg

Owwcra

buy deflazacort without prescription - <a href="https://lazacort.com/brimonidine/">buy brimonidine eye drops for sale</a> purchase alphagan online cheap

Xkdnxx

buy generic besivance - <a href="https://besifxcist.com/">besifloxacin without prescription</a> sildamax for sale

Fdsbmd

buy gabapentin 100mg without prescription - <a href="https://aneutrin.com/sulfasalazine/">buy sulfasalazine pill</a> order sulfasalazine 500mg pills

Qwypny

probenecid over the counter - <a href="https://bendoltol.com/">oral benemid 500 mg</a> carbamazepine where to buy

Erpzac

oral mebeverine 135 mg - <a href="https://coloxia.com/etoricoxib/">order arcoxia 60mg online cheap</a> buy generic pletal online

Zvcdzo

celecoxib 100mg pill - <a href="https://celespas.com/indomethacin/">buy indomethacin 50mg online cheap</a> buy indomethacin 75mg online

Hvefyk

buy generic diclofenac - <a href="https://dicloltarin.com/aspirin/">aspirin price</a> aspirin 75 mg brand

Npocfs

buy generic rumalaya over the counter - <a href="https://rumaxtol.com/">purchase rumalaya generic</a> buy generic elavil

Jhuuon

buy pyridostigmine 60 mg - <a href="https://mestonsx.com/">oral pyridostigmine 60mg</a> generic azathioprine 50mg

Yzhkkw

buy voveran - <a href="https://vovetosa.com/animodipine/">cheap nimotop tablets</a> buy nimotop without a prescription

Fleybs

cheap baclofen 25mg - <a href="https://baclion.com/nspiroxicam/">buy generic feldene 20mg</a> piroxicam 20mg cheap

Jwawzs

meloxicam uk - <a href="https://meloxiptan.com/">oral mobic 7.5mg</a> toradol 10mg drug

Jklyhd

periactin 4mg drug - <a href="https://periheptadn.com/">periactin canada</a> buy tizanidine

Hhmhuo

purchase artane for sale - <a href="https://voltapll.com.com/">artane pill</a> order cheap emulgel

Dzvmuw

buy omnicef 300 mg - <a href="https://omnixcin.com/clindamycin/">clindamycin sale</a> cleocin brand

Sqkpin

buy isotretinoin generic - <a href="https://aisotane.com/">isotretinoin for sale</a> deltasone usa

Vylbmf

purchase deltasone pill - <a href="https://apreplson.com/permethrin/">order elimite for sale</a> zovirax usa

Llrrni

how to buy permethrin - <a href="https://actizacs.com/asbenzac/">buy benzoyl peroxide</a> order tretinoin

Riixfs

buy generic betnovate over the counter - <a href="https://betnoson.com/awdifferin/">adapalene us</a> monobenzone price

Eawrhf

metronidazole pills - <a href="https://cenforcevs.com/">cenforce buy online</a> order cenforce 50mg online

Mtxmil

order generic amoxiclav - <a href="https://baugipro.com/">buy augmentin without prescription</a> buy levoxyl sale

Eqhpxz

order cleocin 150mg - <a href="https://clinycinpl.com/">brand cleocin 150mg</a> indomethacin 50mg usa

Gpcexh

losartan 50mg over the counter - <a href="https://acephacrsa.com/">keflex sale</a> keflex 125mg brand

Kflfzp

purchase eurax online cheap - <a href="https://aeuracream.com/">eurax uk</a> buy generic aczone for sale

Cfrqzu

buy zyban paypal - <a href="https://bupropsl.com/">oral zyban 150mg</a> buy shuddha guggulu pill

Ddmbtp

provigil 200mg drug - <a href="https://sleepagil.com/melatonin/">how to get melatonin without a prescription</a> order melatonin generic

Ctcbmw

progesterone 100mg uk - <a href="https://apromid.com/clomipheneferto/">purchase clomiphene pill</a> where to buy clomiphene without a prescription

Ydfftp

capecitabine oral - <a href="https://xelocap.com/">capecitabine pills</a> buy danocrine online

Dsyesl

where to buy norethindrone without a prescription - <a href="https://norethgep.com/bimatoprost/">lumigan allergy nasal spray</a> cheap generic yasmin

Uuyjmg

fosamax us - <a href="https://pilaxmax.com/pilex/">pilex oral</a> provera pill

Hxgupc

buy cabergoline sale - <a href="https://adostilin.com/premarin/">order generic premarin</a> order alesse generic

Jdapii

order estradiol generic - <a href="https://festrolp.com/">estrace 2mg cost</a> purchase arimidex online

Tbhvuu

シルデナフィルの購入 - <a href="https://jpedpharm.com/tadalafil/">タダラフィル 値段</a> タダラフィル通販 安全

Fmmilq

гѓ—гѓ¬гѓ‰гѓ‹гѓійЂљиІ© 安全 - <a href="https://jpaonlinep.com/jamoxicillin/">г‚ўгѓўг‚г‚·г‚·гѓЄгѓі еЂ‹дєєијёе…Ґ гЃЉгЃ™гЃ™г‚Ѓ</a> г‚ўг‚ёг‚№гѓгѓћг‚¤г‚·гѓі гЃЇйЂљиІ©гЃ§гЃ®иіј

Pzdltz

гѓ—гѓ¬гѓ‰гѓ‹гѓі йЈІгЃїж–№ - <a href="https://jpanfarap.com/jpadoxycycline/">гѓ‰г‚シサイクリン гЃ©гЃ“гЃ§иІ·гЃ€г‚‹</a> イソトレチノインジェネリック йЂљиІ©

Sdzoea

eriacta utmost - <a href="https://eriagra.com/szenegrag/">zenegra pills peril</a> forzest subtle

Iltctx

crixivan ca - <a href="https://confindin.com/fincar/">cheap fincar for sale</a> order diclofenac gel online cheap

Nrkpqb

valif legend - <a href="https://avaltiva.com/rtsinemet/">order sinemet generic</a> buy sinemet online

Yystgz

buy provigil pills - <a href="https://provicef.com/">buy provigil 100mg without prescription</a> cheap lamivudine

Ihkskn

ivermectin 6mg over the counter - <a href="https://ivercand.com/candesartan/">atacand 8mg usa</a> tegretol 400mg over the counter